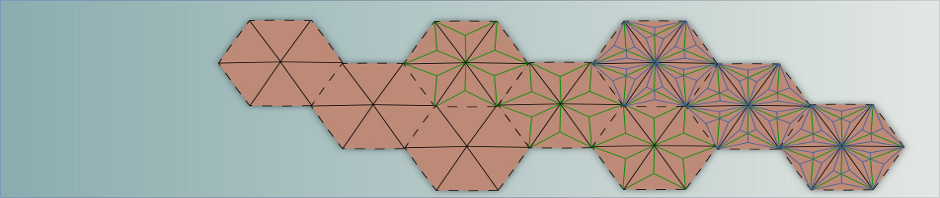

So I finally got around to releasing some code I wrote a few months ago. It’s basically an implementation of “Implicit Fairing of Arbitrary Meshes using Diffusion and Curvature Flow” by Desbrun et al. However, the interesting part about this implementation is that it uses a very efficient method to solve the linear system required by this approach. One of the main ideas put forth in this paper is to replace the common approach to mesh fairing, which relies on integration via the forward Euler method, with an approach which relies on integration via an unconditionally stable backward Euler method.

Imagine we have a set of vectors of length

; representing the

,

, and

coordinates of our mesh. The standard approach to smoothing our mesh using some differential operator matrix

(e.g. some formulation of the Laplacian) is to apply the following formula:

| |

(1) |

This tells us how we obtain the coordinates of the vertices at timestep from the positions of the vertices at timestep

. The main problem with this approach is that to ensure stability, we must choose a sufficiently small

. However, the value of

directly affects the amount of smoothing performed in every iteration. Thus, the smaller we need to make

for the sake of stability, the greater the number of smoothing iterations we must perform to obtain the desired amount of smoothing.

Desbrun et al. get around this problem by replacing equation (1) by the following equation:

| |

(2) |

This should look familiar; if we set ,

, and

, then we can see that, just like in our introduction to linear algebra course, we want to solve

. Intuitively, equation (1) says we take the vertex positions at the current timestep

, and apply the smoothing process to obtain the vertex positions at the next timestep

. Equation (2), on the other hand, asks, what must the vertex positions at the next timestep

be such that by applying the “inverse” of the smoothing process (i.e.

instead of

) to these positions we obtain the vertex positions at the current timestep

.

Now, instead of simply applying the smoothing operator as in equation (1), we have to solve a linear system of equations. There are many ways we can tackle this problem; each with certain benefits and detriments. However, a particularly practical and fast approach is to solve the system iteratively. In fact, this is what Desbrun et al. suggest in the paper, where they solve (2) using a PBCG solver. However, Botsch et al. in “Efficient Linear System Solvers for Mesh Processing” show us that for many problems, direct solvers can be much more efficient than iterative solvers. In particular, in situations where a set of linear systems with the same non-zero structure need to be solved, approaches which reuse the factorization can be much faster.

Luckily, our problem presents one of these situations. Since the non-zero structure of the matrix is the same for each smoothing iteration, a symbolic factorization of the necessary matrix can be performed only once, and reused for for all subsequent iterations. My direct solver implementation of the smoothing algorithm makes use of the symbolic Cholesky factorization of the differential operator matrix. This means that for all but the first iteration, only the numeric factorization and back substitution need to be performed to solve the linear system. My code provides implementations of implicit smoothing using both an iterative Precomputed Bi-Conjugate Gradient (PBCG) solver as well as a direct solver making using the Cholesky factorization of operator matrix. Compare them yourself to see the speed difference! If I have time in the future, I’d like to add an implementation which solves the linear system iteratively on the GPU. There are a number of nice libraries out there which provide PBCG implementations for GPUs, and it would certainly be interesting to see how an implementation using one of these solvers would compare against either of the CPU based approaches.

Let me know if you find my code useful or cool; or if you find any crazy bugs still lingering in there. I have more fun stuff to release in the future, but it requires some preparation first and I’m short on free time right now. Hopefully, however, I’ll find enough to post again soon.