Introducing RapMap

I’ve recently been working with my student Avi Srivastava on a new project, RapMap. RapMap is a tool for mapping (see below for the distinction between mapping and alignment) sequencing reads to a transcriptome. It currently implements two related but distinct mapping strategies, which I refer to as pseudo-mapping (this is no different from pseudo-alignment, an idea developed by Bray et al. as described below, but I will use the name pseudo-mapping for consistency) and quasi-mapping. A pre-print is forthcoming that will describe the tool, methods and results in further detail. However, in this post, I want to describe the main ideas and motivation behind this project, and provide a few of the results that we found so astounding.

Alignment vs. Mapping

First, let me attempt to explain what RapMap is not. This will require me to draw a distinction between alignment and mapping. Read alignment produces a nucleotide-to-nucleotide correspondence between each query sequence it deems sufficiently similar to some subsequence(s) of the reference. The result of computing such alignments is a collection of locations in the reference, as well as a specification (most commonly represented as a CIGAR string) of the correspondence between the query and the subsequence of the reference at this location that yields the smallest cost (or, equivalently, the highest score) under some specified model of sequence distance (or similarity). Such alignments are the de facto currency of many higher-level genomic analyses. It is important to note that the most common read aligners (Bowtie 2, BWA, STAR) are not exhaustive; that is, they do not guarantee that they will discover every subsequence of the reference that is within the specified distance of the query. Though such exhaustive or fully-sensitive tools do exist, they tend to be substantially slower, and are used primarily for tasks in which finding every admissible alignment is essential.

In comparison to alignment, mapping is the determination of the set of locations in the reference that match the query sequence (i.e. the read) “sufficiently well”. Here, I intentionally leave the term “sufficiently well” somewhat vague, as the problem is less well-studied than alignment and a reasonable determination of what constitutes “sufficiently well” is still a reasonable topic for discussion and debate. The key difference between mapping and alignment, as described here (again, I note that these terms are often used interchangeably in the broader community), is that mapping need not, and typically does not, compute or report a nucleotide-to-nucleotide correspondence between the query and locations on the reference. Avoiding this computation provides hope that reads can be mapped much faster than they can be aligned and, indeed, this seems to be the case.

RapMap is a read mapper. Given a reference (in this case, a transcriptome) and set of sequencing reads, it computes the likely loci of origin of each sequenced read with respect to the reference. The question, of course, is how to compute these locations rapidly and accurately. RapMap implements two distinct but related approaches, both of which achieve these goals in spades.

What’s in RapMap?

The first algorithm provided by RapMap is an independent re-implementation of the method of pseudo-alignment (herein called pseudo-mapping) that was first introduced by Bray et al. in the pre-print for the RNA-seq quantification tool Kallisto. Re-implementing a method is a fantastic way to understand how it works, and the initial motivation behind writing the first part of the RapMap coe was to really grok the innards of pseudo-mapping by implementing it. A fringe benefit of this is to provide an implementation of this idea under a different license (currently RapMap is licensed under GPL) than that adopted by Kallisto, whose license was the cause of some debate (a blog post by Lior Pachter, two by Titus Brown (1), (2), and two over at homolog.us (1), (2) ) a few weeks back. While the details of our re-implementation differ in some places from the implementation provided in Kallisto, we neither expect nor observe that these differences have a substantial effect on the speed or quality of the pseudo-mapping process. Thus, I refer the curious reader to the Kallisto pre-print (and the even-more-curious reader to the Kallisto and RapMap source code) for a more thorough description of pseudo-mapping.

The second algorithm provided by RapMap — quasi-mapping — is a novel one [1]though the name was suggested in a tweet at some point around the “Biology of Genomes” meeting in early May, along with a somewhat humous plot. I don’t remember the author of the … Continue reading, and thus the one upon which I wish to focus in this blog post. It is inspired by some ideas from the venerable STAR aligner, and from understanding how to exploit the special structure of the transcriptome to allow fast mapping. I will provide a high-level overview of how quasi-mapping works but, again, the forthcoming pre-print and the RapMap software itself will be the best place to understand the requisite details.

Quasi-mapping (from 30,000 feet)

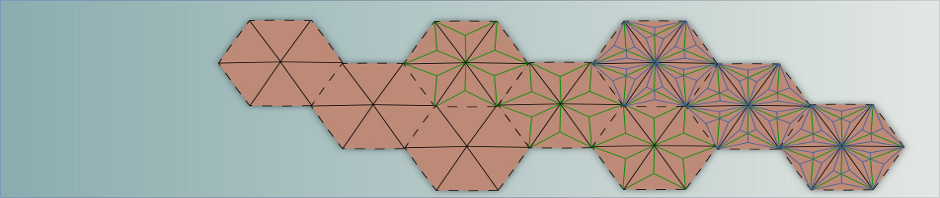

The core data structures exploited by quasi-mapping are a generalized suffix array (SA) of the transcriptome, and a hash-table mapping fixed-depth suffixes (k-mers) from the reference to their suffix array intervals. The hash table isn’t strictly necessary for the method to work — everything about the algorithm I describe below could be done with just the suffix array — but pairing the suffix array with a k-mer hash table to reduce the interval on which binary searches need to be performed can improve speed substantially. The hash table provides an expected lookup that allows one to retrieve, for any k-mer, k, the suffix array interval

where it occurs, while the suffix array allows

lookup for any substring of length m, where

is the length of the concatenated text, T, on which the generalized suffix array is constructed. That is, for an arbitrary pattern p of length m, we can find

in

time. It’s worth noting that the suffix array search can be improved to

using an auxiliary data structure (the LCP array), and that the “simple accelerant” of the suffix array search (described in Gusfield’s classic text) allows one to achieve search speeds practically comparable without the need for the auxiliary LCP array. Further, pairing the hash table with the suffix array ensures that any pattern of length m that begins with a k-mer in the hash can be found in

time (which, due to the simple accelerant mostly becomes

in practice). I also note here that a few more neat tricks (e.g. super-fast rank operations on a bit array, as provided by the method of Vigna) are necessary to yield a fast and practical index for quasi-mapping.

Given this index, the core algorithm behind quasi-mapping can be described based on two simple concepts; the maximum mappable prefix (MMP) and the next informative position (NIP). I will describe these concepts, and then how they are used in the quasi-mapping algorithm. A maximum mappable prefix, p, is a prefix of some suffix of a query that cannot be extended further without the size of going to 0. In other words, it is the longest prefix of a given suffix of the query for which there is at least a single occurrence in the reference. Given a query q, the the MMP of q, and its suffix array interval, can be found with two slightly modified suffix array searches. That is, we can determine the maximum length prefix of q appearing in our reference, and the corresponding interval in the suffix array, almost as quickly as we can determine the suffix array interval for a pattern of a fixed and pre-specified size.

Consider a query q, with MMP of p, corresponding to a suffix array interval . By the definition of the MMP, we can not extend p any further without introducing a mismatch between the query and reference. However, it is quite possible (and often turns out to be the case in practice) that

and

share some prefix of length

— that the bounds of the suffix array interval where we end up after searching for the MMP of q share a longer prefix than the query string which led us there. In this case, we define the next informative position (NIP) on the query as

, where

is the position on the query where the current maximum mappable prefix begins, and

denotes the longest common prefix function. In words, the NIP at a position

on the query is simply

plus the length of the longest common extension of the suffix array boundaries for the maximum mappable prefix that begins at

. Equipped with these definitions, we are now ready to discuss the quasi-mapping algorithm.

For the sake of simplicity, I will describe the situation in which all “hits” within a read (described below), occur in the forward direction with respect to the reference. The technical details for handling reverse-complement mappings are important, but rather trivial. Consider mapping a read r against the reference using the index described above. We begin at the left end of the read, looking, in turn, at each k-mer, until we find one that is present in the k-mer hash we’ve built. Call this k-mer w, and imagine that it constitutes the first k bases of some suffix p of the read. At this point, we find the corresponding suffix array interval , and perform the requisite search in this interval to determine the maximum mappable prefix of p (the suffix of the read that starts with the k-mer, w, we have queried). Since all suffixes in the suffix array that begin with some length

extension of w must reside in a (not necessarily proper) sub-interval of

, we can restrict the binary search to this interval, making it a

operation rather than a

one. Let p’, some prefix of p, be the maximum mappable prefix we have determined, and let

. Finally, we compute

. Now, rather than move by a single nucleotide to the next k-mer to continue our search, we instead move to the position in the query that resides k-1 bases before

. That is, the next k-mer we consider will be the rightmost k-mer that is not contained within the substring of the query that starts at

and ends at

. As it turns out, the distance between

and

is often quite large meaning that, on average, we have to perform this process very few times before we have “covered” the entire read. Once this process is complete, we have a collection of suffix array intervals (these are the “hits” mentioned above) — one corresponding to each MMP we consider — which can then be easily processed to extract the transcripts and positions to which this read maps. Specifically, to generate a set of mapping positions, we find the set of transcripts that appear in every hit, and find the positions on these transcripts that are (approximately) consistent with the distance between the corresponding MMPs in the query.

Modulo some optimizations and book-keeping details, that’s it. The quasi-mapping procedure simply builds up, for each read, a collection of these “hits”, and then processes them to extract the mapping locations for each read. Paired-end reads are mapped by filtering the mapping locations for each read to find consistent (concordant) mappings for the pair. So that’s a high-level overview of the algorithm. The tool itself, RapMap, is also dead-simple to use. It exposes 4 commands ,

,

and

— you can guess what each does. The standard process is to build the appropriate index on a target transcriptome using the corresponding “index” command. Then, given a collection of sequencing reads, the “map” command can be used, along with the pre-computed index, to map reads fast . . . really, really, really, fast. How fast? Let me give you some numbers from preliminary testing. First, on my iMac, it takes ~2 minutes (141s) to build the quasi-mapper index on the hg19 transcriptome. The majority of the time spent indexing is, in fact, just spend in the suffix array construction algorithm (using the popular SAIS implementation of Yuta Mori). While 2 minutes for index building on the human transcriptome probably isn’t something one should even bother optimizing, there are some interesting looking suffix array construction algorithms that are potentially faster.

Letting RapMap stretch its legs

Now, we consider mapping 25 million 76bp paired-end reads (generated with an empirical error distribution) from a synthetic dataset with a substantial amount of true multimapping (i.e. reads mapping to multiple, sequence-identical positions on the transcriptome) using an increasing number of threads. The timings come out as follows (“no output” here refers to the fact that these runs check just the time required to do the mapping or alignment, not to dump their giant files to disk):

STAR parameters were adjusted appropriately for mapping directly to the transcriptome (i.e. to not allow introns etc.), as this timing result is much more fair (in my opinion) than forcing it to do spliced mapping to the genome. Both STAR and Bowtie 2 were allowed to output up to 200 multi-mapping locations (though they, like RapMap, often find far fewer than that man). Now, the interesting thing here (to me, at least) is that RapMap finishes this job with a single thread substantially faster than STAR completes with 8 threads (~5 minutes vs ~25 minutes). With 8 threads, RapMap takes ~1.5 minutes — about 17 times faster. While STAR’s speed would likely come down even further with more threads, we hit a (very artificial) wall with RapMap because, as the number of threads increases, the time required to load the index becomes an increasing fraction of the total runtime (the mapping itself takes only 37 seconds, and faster index loading is a feature high in our priority queue). Of course, with larger read sets, this “problem” goes away, and RapMap took ~1m to compute the mappings for 75 million reads (paired-end, same length) with 15 threads. In fact, the real bottleneck to speed is simply writing the output (the timings discussed above are with respect to STAR’s “–outSAMmode None” flag, RapMap’s “-n” flag, and piping the output of Bowtie2 to /dev/null, since it doesn’t directly expose the ability to disable output — this could partially account for the somewhat surprising timing results for Bowtie2), suggesting the utility of making use of RapMap’s quasi-mappings directly in memory via an API (which we’re currently working on), and potentially developing a much sparser output format (currently, it’s plain-old SAM). Though the wisdom of providing a custom output format is not obvious to me, it might be the case that we’ve entered such a substantially different class in terms of speed that there is justification for it — but I’m still very skeptical. It’s also worth mentioning here that this 25 million read dataset is a particularly “tough” one. STAR, for example, reports a 73.6% multi-mapping rate and an error rate (Mapped MMrate) of ~0.5%. I didn’t take the time here to run STAR and Bowtie2 with 1 or 4 threads, but you can extrapolate down what those times might be.

So the “take-home” message of the above table is that RapMap (and, by extension, quasi-mapping) is fast. Of course, it’s also unreasonably fast to read a file, write an output called results.sam, and return 0. What I’m getting at, obviously, is that the tests above suggest that quasi-alignment is, indeed, outrageously fast, but how accurate is it? Well, this data is synthetic, so we can check. It’s important to note here though that because of the nature of this data, assessing accuracy is a little bit tricky. We know the true origin of each read, but we don’t really want to penalize a method for finding hits that map to, say, the same exon as the true hit, but appear in a different isoform of the gene. So, in the results I present below, the following (somewhat liberal) rule is used to designate “True Positive”, “False Positive” and “False Negative”. A false positive is a read that is mapped (or aligned), but none of its mapping locations is the correct one. In this case, the entire group of alignments is taken as a single false positive rather than counting each, independently, as a false positive. A false negative is defined here as a read that doesn’t map (since each read in the synthetic data was generated from an mappable locus — modulo some minor caveats — this is just the total number of reads minus the number of reads mapped). Finally, a true positive is defined as a group of mappings for a read where at least one of those mappings is to the actual locus of origin. These metrics leave out an important feature, however. While it is, to some extent, acceptable to consider a read to have / be a true positive mapping if at least one of it’s mapping loci is correct, it also matters how much extra ambiguity is present. By this, I mean that if a mapper / aligner reports 3 mappings for a read, one of which is true, this is in some sense better than reporting 10 mappings for the read, even if one of the 10 is true. So, in addition to the accuracy metrics below, I’ll also note the average number of mappings per read, where we’ll interpret lower to be better so long as it doesn’t have a negative effect on the other metrics — the metrics reported in the table as defined in the Wikipedia article here :

So, we see that all of the aligners (or mapper in the case of RapMap) seem to do reasonably well, with qasi-mapping putting up some very nice numbers. RapMap seems to report more mappings per read than STAR, but given its substantially higher recall, these extra mappings may be unavoidable if we’re also to recall the correct mapping. Bowtie 2 has a very nice precision and recall as well, but generates a markedly larger average number of hits-per-read than either STAR or the quasi-mapper. One potential explanation for this is that Bowtie2 is likely outputting some sub-optimal alignments as well. Unlike Bowtie1, there is no way to return all mappings in the best stratum, which is, essentially, what we want. So Bowtie 2 and RapMap seem, in some sense, comparably “accurate” here, but there is less ambiguity in the true positive alignment groups for RapMap (and it generates its result way, way, faster).

One final point is that all of the RapMap numbers given above are for the “quasi-mapping” algorithm, not the independent re-implementation of “pseudo-mapping”. The pre-print will have some more inclusive (and comprehensive) performance numbers; the results are similar in many regards, and both clearly out-perform the more traditional approaches (again, remember the not-quite-apples-to-apples comparison of mapping vs. aligning), but there may be some reasons to grant slight preference to quasi-mapping [2]We generally find that pseudo-mapping is similar in terms of speed, and in terms of many of the metrics we consider above, but yields a somewhat higher “hits-per-read” statistic than … Continue reading.

A bright future for “lightweight” mapping?

So, I hope you find quasi-alignment to be an interesting idea, and RapMap to be a useful tool. An obvious question is “where might a tool like this be useful?” Specifically, since we’re performing mapping and not alignment, we can’t really use this for things like e.g. variant detection (yet). Well, one place is exactly where such methods (at least with respect to RNA-seq) first originated — speeding up transcript quantifiaction; Salmon introduced “lightweight-alignment” then Kallisto introduced “pseudo-alignment” (both are “mapping” in the terminology used above). It’s a virtual certainty that “quasi-mapping” will make it into Salmon soon, and will provide a nice speed boost to the already “wicked fast” tool — it’s just a matter of settling on the RapMap API and doing the requisite testing. However, such a tool brings along capabilities that extend beyond quantification, and we have a few places we’re interested in applying these concepts. One immediately obvious application (to us, at least) is clustering, where one might very quickly find similar sequences by looking at shared multi-mapping reads. We’ve also had the realization that if we can reduce every query to a very small number of potential loci of origin in the span of a few minutes, we might be able to turn these mappings into alignments without too much extra overhead (in some sense, the mapping acts like an incredibly efficient, albeit potentially lossy filter). Since part of the quasi-mapping index is the un-molested reference (well, if you don’t consider concatenating things together separated by ‘$’ as a form of textual molestation), actually throwing an efficient (e.g. banded, bit-vector) alignment algorithm at the mapping loci shouldn’t be too hard. Further, it might be possible to speed even this step up. Since the quasi-mapping procedure trivially gives us useful information about regions of the reference that exactly map, this means that alignment to different positions sharing identical sequence may also be able to share alignment computation. We have quite a few other ideas as well, but I think I’ve gone on more than long enough for this post, so talking about those will have to wait for another day.

References

| ↑1 | though the name was suggested in a tweet at some point around the “Biology of Genomes” meeting in early May, along with a somewhat humous plot. I don’t remember the author of the tweet, but would like to credit the appropriate person with the name, so please let me know if you recall! edit: As pointed out by Valentine, it seems credit for this name goes to Stephen Turner, with the relevant tweet linked in the first comment below. |

|---|---|

| ↑2 | We generally find that pseudo-mapping is similar in terms of speed, and in terms of many of the metrics we consider above, but yields a somewhat higher “hits-per-read” statistic than quasi-mapping. We also checked this against Kallisto directly to ensure that it wasn’t just a quirk of our implementation |

Pretty sure this is the inspiration for the quasi-alignment name that you mention: https://twitter.com/genetics_blog/status/596062435582312448

Indeed; Thanks, Valentine!

Hi Rob,

I love the idea behind RapMap and look forward to your future developments! As a suggestion for the ‘conversion’ of mappings into alignments, we explored the idea of using ‘a priori knowledge’ for read alignment a few years ago with regard to targeted sequencing (specifically the HaloPlex technology).

Feel free to have a look: http://www.ncbi.nlm.nih.gov/pubmed/22581774.

Best,

René

Hi René,

Thanks for the kind words! Also, thanks for pointing us at this reference. We’re currently looking into this restricted alignment problem, and the initial results seem to be very promising indeed. There are, of course, a few standard techniques to improve alignment speed given certain restrictions (e.g. reported exact matches must participate in the optimal alignment), but it turns out there are a few other special conditions that allow for further acceleration in our particular context. We’ll be sure to check out your paper and see if any of the ideas you propose there can be applied in this context (I’d venture to guess they probably can!).

Thanks,

Rob