I’m kicking off this year with a rather different kind of post. Actually, I started writing this post some time ago (November 19, 2013!), if the draft log of WordPress is to be believed. I’d been working for some time on Sailfish — our tool for transcript quantification — and I wanted to write a post to lay out some of the many different ways that people characterized relative transcript abundance in RNA-seq experiments. Originally, the plan was for this to be for my own edification as well as to act as a nice little pointer to which people could refer when they were curious about the specifics of a particular measure, or wanted to know how different measures were related.

Needless to say, I never finished that post, and partly gave up on the idea of it since some other nice posts (like Harold Pimentel’s excellent “What the FPKM?” post) came along. However, I’ve decided to revive this post with a rather new idea in mind — to make it a “living” post. That is, I want to start out with the basics I planned to write before (i.e., what are the commonly used measures, what do they mean, and what are some of their benefits and shortcomings). Subsequently, however, I want to use this post as somewhat of a FAQ on transcript abundance measures and related questions. I find myself answering common questions about relative abundance fairly frequently (be it in the user group for a piece of our software, or on a forum such as BioStars). It would be nice to point to the answer here, if it exists, or to create it here otherwise.

Meeting the players

Estimating gene (or isoform) abundance from RNA-seq is a fairly active area of research and, like any active area, there are a slew of terms and ideas that are appear in the literature. However, one of the most basic questions is “how should one report estimates of transcript abundance?” Ideally, one would like an estimate that reflects the quantity we actually want to know (the underlying relative (more on this later) abundance of different transcripts), while remaining intuitive and comparable across experiments.

The literature contains a variety of different metrics that have been devised to serve this purpose. Below, I list some of the more popular measures:

- RPKM — Reads Per Kilobase per Million mapped reads

- FPKM — Fragments Per Kilobase per Million mapped reads

- TPM — Transcripts Per Million

- Read Count — Number of reads estimated to have originated from a transcript

- CPM — Counts Per Million

In reality, RPKM and FPKM are very similar. FPKM just accounts for the fact that fragments, not reads, are what are being drawn from transcripts. Thus, below, I will not delineate between RPKM and FPKM — rather, I will just refer to *PKM.

Let’s start out with some basics. Our underling RNA-seq sample consists of a collection of many transcripts. There are M distinct types or species of transcript. We denote by the total number of transcripts of type i. Clearly, the total number of transcripts in our sample is given by

. Now, here’s a crucial point; our RNA-seq experiment will sample fragments from this population of transcripts. The fact that our assay is sampling from a population has a few important implications.

First, in the absence of sampling biases, we expect to sample fragments from this population such that the number of fragments that we draw from each type of transcript i is proportional to the total number of sequenceable fragments that all transcripts of type i might produce. This implies that we will see many fragments from the most abundant types of transcripts, and many fewer fragments from less abundant transcripts (that’s good — otherwise it would be difficult to estimate abundance with this data). However, it also implies that (1) very rare transcript types may not generate any samples at all, dependent upon how deeply we sample and (2) the rate at which we expect to sample fragments from transcripts of a given type depends crucially on the length of these transcripts. For example, a single 200,000 nucleotide transcript is much more likely to generate fragments than a single 2,000 nucleotide transcript, even though there might be only a single copy of each in our underlying population. The length effect is inherent in the standard RNA-seq protocol, since we expect to sample fragments randomly across the entire length of underlying transcripts.

Second, the process itself produces relative abundance information. Given the fragments we generate during our experiment, we can say what the relative proportion of the different transcript types are, but we cannot immediately determine e.g., the actual number, , of transcripts of type i in the population. Moreover, we are not guaranteed to see fragments from everything that exists in our population — low abundance transcripts may go completely unsampled.

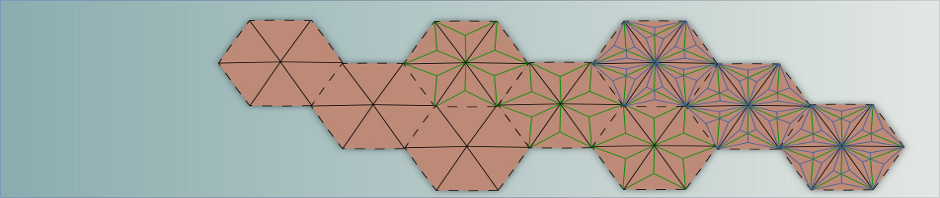

Given these considerations, we can now think a bit about how, given the fragments sampled in a specific RNA-seq experiment, we might derive estimators of the underlying abundances in which we’re interested. Consider the following quantities — here, I’m adopting terminology mostly from (Li, Bo, et al. “RNA-Seq gene expression estimation with read mapping uncertainty.” Bioinformatics 26.4 (2010): 493-500.) Let be the fraction of transcripts in the underlying population that are of type i. Recall that, since our assumption is that we sample from a transcript type proportional to the total number of sequenceable nucleotides for transcripts of that type, this is not the fraction of fragments we expect to derive from transcripts of type i. Instead, that fraction is given by

. Here, we multiply the count of each underlying type of transcript in the population by its effective length,

. The effective length is akin to the regular length of each transcript type (

), except that it accounts for the fact that not every transcript in the population can produce a fragment of every length starting at every position. Actually, a transcript has an effective length with respect to each possible fragment that maps to it. Consider a transcript of length

and a fragment mapping to this transcript with a length of

. Then, the effective length of this transcript with respect to this fragment is

. This is the number of places on transcript i that a fragment of length

could start, and the probability of any such position (assuming a uniform starting distribution) is just

.

However, it will generally be convenient to talk about the effective length of a transcript independent of any specific fragment. In that case, what we actually mean is the expected effective length of the transcript (the effective length, in expectation, over all fragments that might derive from it). This can be computed as , where

is the mean of the empirical fragment length distribution (the observed distribution of fragments in our sample) truncated to consider only fragments of maximum length

. Given these fundamental quantities of interest, we can now discuss how they relate to the quantities that are often reported by transcript quantification tools.

TPM and *PKM

The TPM metric is an estimator of . That is:

where is our estimate of the expected number of fragments deriving from transcripts of type i (determining this quantity is one of the fundamental challenges in RNA-seq quantification, and is the raison d’être for transcript quantification methods). It’s also the case that *PKM is proportional to TPM and, therefore, the transcript fractions. However, TPM is to be strictly preferred to *PKM, since *PKM contains an essentially arbitrary scaling factor (which is dependent on the average effective lengths of the transcripts in the underlying sample — see, e.g., this blog post). Though you shouldn’t use it, I’ll include the FPKM formula here for the sake of completeness:

where is the number of mapped or aligned reads in the sample.

Read Count

So, what about the raw (or estimated) read count? This is a very easy metric to interpret. Also, it is clearly related to the other metrics (e.g., it is the ) in the TPM equation above. However, as a measure of relative transcript abundance, it has two particular shortcomings. First, it is not normalized within sample, and so a read count of 100 in sample A may have a very different meaning from a read count of 100 in sample B (what if sample B has exactly the same composition as sample A, but is sequenced to twice the depth?). Of course, relative abundance measure cannot, in general, be directly compared across samples. However, the raw read counts are even “less” normalized than other standard metrics. Second, this metric is not normalized by length. Recall how I mentioned above that we expect, a priori, longer transcripts present in the same number to produce more sequenceable fragments than shorter transcripts. The read count, as a metric, doesn’t take this into account. So, transcripts present at the same underlying abundance may have drastically different read counts even within the same sample. While not a good metric for directly comparing or measuring the abundance of transcripts directly, the read count (or estimated read count) is the basis of many derived metrics that attempt to normalize for differences between samples. This is, in part, because the read count can be judged relative to the sequencing depth (the number of reads in the sample), and therefore one can derive reasonable scaling factors for this metric across samples.

Counts Per Million

The counts per million (CPM) metric takes the raw (or estimated) counts, and performs the first type of normalization I mention in the previous section. That is, it normalized the count by the library size, and then multiplies it by a million (to avoid scary small numbers). In equations:

Interestingly, the CPM metric is directly related to the nucleotide fraction mentioned earlier in this post. It essentially measures the rate at which we expect to sample reads from a particular type of transcript.

Frequently Asked Questions (FAQs)

Note: This section is starting out barebones, but I expect it to be updated early and often.

Can I compare TPM / *PKM / CPM across samples?

It depends what you mean by “compare”. Because these measures are purely relative, you cannot reliably use a metric like TPM to directly assess differences in transcript abundance across samples. Purely relative metrics are not suited for comparison across samples. Specifically, changes in the composition of a sample can lead to changes in TPM, even if transcripts are, in reality, expressed at the same true level across samples. Metrics like this can be useful for “high-level” comparisons (e.g., visualizing samples etc.). However, whenever using a relative metric like this, one should be aware of its relative nature and the potential caveats that go along with interpreting them between samples.

Does TPM account for “fragments”?

Yes. That is, a proper implementation of the TPM metric will account for fragments in the way that FPKM does in comparison to RPKM. Paired-end reads are treated as a single fragment, while in single-end libraries, each read is treated as a single fragment. I have answered this question in depth (with explanation) on Biostars, here.

What is the “effective length” of a transcript?

See the description earlier in this post