This past week was the 28th annual Biology of Genomes (BoG) meeting at Cold Spring Harbor. While I wasn’t able to attend the full meeting, I was able to attend on Wed. (the ability to be local and have access to the CSHL meetings is one of the huge benefits of being a faculty member at Stony Brook who is interested in Genomics). There was quite a bit of amazing work at BoG this year, but one of the posters was on a topic particularly close to my heart — rapid quantification from RNA-seq data.

Kallisto

Nick Bray, Harold Pimentel, Páll Melsted and Lior Pachter introduced a new method and tool, called kallisto for fast and accurate quantification of transcript-level abundance from RNA-seq data. I had a chance to drop by the poster session and speak with the first 3 authors, and also (in the last hour) to read the pre-print of the paper. First, I should say that I think the idea behind kallisto and the implementation of the software are excellent (Lior’s group has a history of developing very cool methods and backing these up with well-written, well-maintained software). Basically, they demonstrate that you can obtain the speed of a tool like Sailfish (which quantifies abundance based on k-mers and k-mer counts) without “shredding” the reads into k-mers, which can sometimes discard useful information contained therein. Along with an efficient implementation and (from what I can see so far, some very nice C++11 source code), these results are obtained mainly through the concept of pseudoalignments.

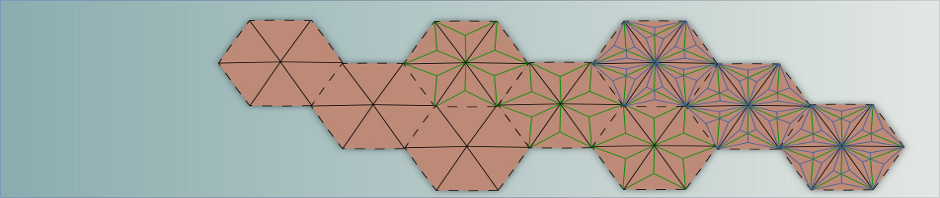

Essentially, pseudoalignments define a relationship between a read and a set of compatible transcripts (this relationship is computed based on “mapping” the k-mers to paths in a transcript De Bruijn graph). The pseudoalignment here gives us more information than the set of individual k-mers, since the k-mers in a read remain “coupled” when the read is assigned to a transcript equivalence class. As the pseudoalignments are generated, equivalence classes are computed and maintained — similar to the concept of equivalence classes among reads as introduced in the IsoEM paper or to equivalence classes among k-mers as used in Sailfish.

The results of kallisto are very impressive, and the idea of pseudoalignments, is, I think, a very good one (more on this below). Essentially, we are converging upon an answer to the question, “what information is really needed to obtain accurate quantification estimates?”, and trying to develop methods that use this information to compute answers as efficiently as possible (but no more efficiently ;P).

Salmon

One of the reasons kallisto and it’s ideas are so close to my heart is that, after the publication of Sailfish, Carl and I didn’t stop thinking about where the Sailfish model might fall short and how it might be improved. In a somewhat awesome twist of fate, while the kallisto team was in some sense “inspired” (or at least provoked to deep thought) by the approach of Sailfish, we were also inspired by some of the ideas introduced in Lior’s (and his previous student Adam Roberts’) prior RNA-seq quantification tool, eXpress.

One of the huge benefits of eXpress is that it incorporates a “streaming” inference algorithm. This means that abundance estimates are being computed continuously while alignments are being parsed (not just in “batch” iterations of an EM algorithm once all of the — potentially huge — data has been loaded into memory). To us, one of the intriguing things about the streaming inference model is that, since you consider how a particular read affects your statistical inference of transcript abundances when you parse the alignment, you can easily consider all of the relevant information you have up to that point (e.g. in terms of the sufficient statistics and auxiliary parameters like fragment length distribution and bias models) when you look at this alignment. For us, this presented a very elegant solution to the k-mer shredding problem. If we adopted an online inference algorithm, we could consider all of the information contained within a sequencing read at the time we read it from the file. Thus, even though we had an efficient hashing data structure based on k-mers, we could perform inference over reads without “shattering” or “shredding” them into these k-mers for the purposes of inference. This was the genesis of the idea for our Salmon software, which we started working on right around the time the Sailfish paper was published. Salmon has also been a radical experiment in “open development”, as we’ve been developing the method and source code in the open. The initial public commits for Salmon (after a short period — a few months — of initial private development) happened in the Sailfish repository in early June of 2014.

Lightweight alignment

One of the key concepts in Salmon is the notion of a lightweight alignment. It is similar in spirit, but different in practice from the psedoalignment idea of kallisto. We recognized that the key piece of information required for accurate quantification of transcript abundance from RNA-sequencing data using “reads” was the approximate locations where those reads occur. That is, I can perform very accurate quantification if I tell you the loci from which a read may have originated (with high probability) — much of the other information contained in the alignment, including the optimal alignment between the read and the corresponding transcript, is largely un-necessary.

Initially, in Salmon, we took an approach based on counting the intersection between the set of k-mers in a read and the set of transcripts. This allowed us to determine the transcripts to which reads “mapped” better, and also allowed us to take into account paired-end information. However, this approach also had some limitations. For k-mers to be efficient for this purpose, they have to be fairly long. Also, to be as robust as possible to errors, one often has to hash many overlapping k-mers which, while much faster than alignment can still take quite some time.

After some experimentation with the k-mer based approach, we moved on to another idea for lightweight alignment — chains of maximal exact matches (MEMs) and super maximal exact matches (SMEMs) — and this is the model that Salmon uses today. Let me explain this idea in a little more detail. The Salmon index is not a k-mer index, but a suffix array + BWT-based index (specifically, we use Heng Li’s excellent implementation of the FMD index — essentially equivalent to, but in some ways more efficient than, a bi-directional BWT index). Given this index, we can now efficiently discover shared substrings of any length between a read and the set of transcripts. This means that if a read maps end-to-end with no errors, we can find it quickly using the index without hashing and looking up all of the many overlapping k-mers — we can also stop the lookup early once an exact match is good enough to suggest that a read is compatible with a set of transcript locations.

However, if I have errors in my reads (or true variation between the reference and the sequenced sample) then there will be mismatches. This means we can’t expect all (or even most) of our reads to map end-to-end with no errors. We handle this problem efficiently through the use of “chains” of MEMs. That is, we compute the set of maximal exact matches between a read and the collection of transcripts. Each MEM has a position with respect to the read and a position with respect to the transcript. These MEMs can be “chained” together to form longer consistent, approximate matches between a read and a set of transcripts — these chains look like variable length exact matches interrupted by single mismatches or small indels.

These chains can be computed very efficiently, and they provide us much flexibility in determining what we mean by a “compatibility” between a read and a transcript. For example, by allowing small non-linearities in the positions of the MEMs in our chains, we can account for indels up to a desired length. If we have longer reads, we can also eliminate spurious hits by requiring that a sufficient fraction of the read is “covered” by exact matches in the chain. Finally, since we build these chains using the very versatile FMD index, we’re not restricted to any particular k-mer size. If we have particularly noisy reads, we can simply re-run Salmon with a more liberal “coverage” threshold and a smaller minimum MEM size (by default, very small MEMs are discarded since they are generally not useful). This makes the framework of lightweight alignment not only incredibly fast — in my most recent run of Salmon I was able to quantify 75 Million 76 bp paired-end reads in 2m 45s (albeit using 20 threads ;P) — but also very versatile. The size of the matches adapts seamlessly to the quality of the data. This leads to faster lightweight alignment when reads can be easily determined to be compatible with sets of transcripts, but sufficiently sensitive lightweight alignments when the read are noisy or there is significant variation with respect to the reference being used for quantification.

Another benefit of lightweight alignment is that, while it doesn’t give us the exact base-to-base mapping provided by traditional alignment, it does give us most of the relevant and useful information pertinent to transcript quantification. For example, not only do we get the set of transcript with which a read is compatible, but we also get the position within that transcript. We can use to model a non-uniform read start position distribution and, by looking at the reference sequence appearing upstream and downstream of the read start, we can also use this information to model sequence-specific bias in the reads.

It’s also with noting that although Salmon is already “wicked”-fast (a phrase I picked up in my one year attending college in Worcester Massachusetts), the work of the kallisto guys motivates me to make it even faster, and also gave me some ideas (e.g. supplementing the FMD-index with an auxiliary k-mer hash to quickly look up the BWT intervals for k-mers equal in size to the minimum allowed MEM length).

The rest of Salmon

Of course, lightweight alignment is only one of the key concepts that makes Salmon fast and accurate. In fact, you can even use Salmon without lightweight alignment. Some of the feedback we took to heart after the release of Sailfish is that

- “Actual” alignment can be fast (e.g. tools like STAR and HISAT can align hundreds of millions of reads an hour)

- Researchers often compute alignments for many reasons apart from quantification — no matter how fast lightweight alignment and pseudoalignment are, if you’re going to be aligning the reads anyway for other analyses (or simply because you don’t yet trust these fancy new “not-actually-alignment” methods) there is really no point in wasting CPU cycles to recapitulate the information that is already present in the BAM file

For this reason, Salmon can work equally well via lightweight or traditional alignments. If you don’t have alignments and wish to quickly quantify transcript abundance, build a Salmon index and we have you covered with lightweight alignment. If you already have your reads aligned, no problem, just pass us the BAM file and we’ll quantify abundance using that (even faster, of course, than if we had to compute the lightweight alignments ourselves). Under the hood, Salmon uses the same efficient inference engine whether you’re quantifying using lightweight or traditional alignments, which makes it, in many ways, a very versatile tool.

The other huge piece of Salmon is that efficient inference engine. As I mentioned above, Salmon uses an “online” or “streaming” approach to inference (in many ways similar to, but in important ways different from eXpress). The inference algorithm is a variant of Stochastic Collapsed Variational Bayesian Inference, but with some novel ideas (including, e.g., the use of efficient atomic updates along with massively-parallel asynchronous updates a la PASSCoDe-Atomic). It also implements a novel concept called “banking” to reduce, even further, the computational complexity of the inference procedure, and a lightweight alignment cache to avoid wasting time on the re-computation of lightweight alignments in case Salmon decides it needs to make more than one pass over the data (often times, a single pass through the data is sufficient to achieve convergence and accurate expression estimates!). Actually, there’s a lot more that I could say about Salmon‘s inference algorithm, but that’s fodder for another post

As a closing though — in his recent post on Kallisto, Lior muses:

However, the fact that simpler was so much faster led us to wonder whether the prevailing wisdom of seeking to improve RNA-Seq analysis by looking at increasingly complex models was ill-founded. Perhaps simpler could be not only fast, but also accurate, or at least close enough to best-in-class for practical purposes.

Salmon, in some sense, challenges the notion that we have to choose between a fast model and a complete one, that we have to consider a substantially simplified model to obtain very rapid inference. Because of the very useful information we get from lightweight alignments, and the fact that the asynchronous streaming inference algorithm allows us to evaluate arbitrarily sophisticated models (which will, no doubt, continue to be developed and of increasing utility) almost as quickly as we can read through the input file, it seems, actually, that we can have both! Why not give Salmon a try?

As you state that you can either use Salmon to do lightweight alignment or use an existing BAM file (eg from STAR), do you think it makes a difference in terms of quality? Do you get a sense that Salmon is better or worse at this than a mapper like Tophat or STAR?

Hi James,

That’s a great question. The answer, unfortunately, isn’t 100% trivial. We’ve analyzed a lot of data with Salmon, and I can tell you that generally, we see the following: (1) the differences between using BAMs and using quasi-mapping is usually small — by design (of quasi-mapping) they yield very similar results (2) when there is a non-trivial difference (rarely), and we know the “truth”, quasi-mapping usually does better than alignment; yet (3) there is a small number of scenarios where we’ve seen alignment produce similar but slightly-better results. My general recommendation would be to go with Salmon’s built-in mapping (which is now quasi-mapping by default) unless you have a compelling reason not to (e.g. the alignments were created with special, very specific parameters, or you need to use a feature that is currently only supported in alignment-based mode, like producing a sampled one-alignment-per-read .BAM file).

Great, thanks for the speedy reply! The only reason I would use BAM would be to save some some time as I already the BAM files. I am glad to know if there are any differences, they are likely to be small.

Hi,

I was wondering where are the differences between salmon and kallisto?

thanks

Assa

Hi Assa,

There are quite a few differences between these tools. They use a different concept and algorithm for mapping the reads, employ different inference strategies to try and determine the relative abundances, and have different models in which they are performing inference. You can find the details in the Salmon pre-print, but if you have specific questions, I’d be happy to answer them.

Hi Rob. Any thoughts on updating this in light of recent developments / publication on Salmon?

Hi Stephen. Thanks for stopping by the blog! I’m looking to start updating things more regularly here. Anyway, I’ll definitely consider updating this post (or writing a new & related one) in light of some of the more recent developments. Specifically, we have an ISMB paper this year (the second of our papers here — http://hitseq.org.s3-website-us-east-1.amazonaws.com/proceedings.html — not deBGR, though I might try to blog on that one as well), that is related to some of the modeling issues and tradeoffs in different equivalence-class decompositions of the quantification likelihood function. There’s a lot of interesting questions in this space, I think. The challenge (w.r.t a blog post) will be providing enough background to motivate and describe the relevant distinctions without basically recapitulating the whole paper ;P.